Thank you for the BIOS.

I bought two Samsung M.2 drives.

I managed to install Windows 10 on one of them.

Windows shows both drives.

How do I set up RAID 0?

Is my only option to load RAID drivers while installing Windows 10? Which one? Intel RST?

Thanks

You can’t.

X79 doesn’t have the NVMe RAID support needed to set up an array before Windows is installed.

You can run a software RAID 0 as long as the OS isn’t installed on the NVMe drives (no RAID driver is needed at boot in that case).
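
For anyone wondering what a software RAID 0 actually does with the two drives: it stripes the volume across them in fixed-size chunks. The Python sketch below shows that address mapping; the 128 KiB stripe size and the `raid0_locate` name are illustrative assumptions, not what Windows or any particular RAID tool uses.

```python
STRIPE_SIZE = 128 * 1024  # bytes per chunk; an illustrative assumption only


def raid0_locate(offset: int, num_drives: int, stripe_size: int = STRIPE_SIZE) -> tuple[int, int]:
    """Map a byte offset in a RAID 0 volume to (drive index, byte offset on that drive)."""
    if offset < 0 or num_drives < 1 or stripe_size < 1:
        raise ValueError("offset must be >= 0, num_drives and stripe_size must be >= 1")
    chunk = offset // stripe_size   # which chunk of the volume this byte falls in
    within = offset % stripe_size   # position inside that chunk
    drive = chunk % num_drives      # consecutive chunks rotate across the drives
    row = chunk // num_drives       # stripe row, i.e. how many chunks precede it on that drive
    return drive, row * stripe_size + within


if __name__ == "__main__":
    for off in (0, STRIPE_SIZE, 2 * STRIPE_SIZE + 10):
        print(off, raid0_locate(off, num_drives=2))
```

Consecutive chunks alternate between the drives, which is where the throughput gain comes from, and also why losing either drive loses the whole array.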

Thanks to @agentx007 for the BIOSes, and to @Lost_N_BIOS for the bifurcation mod!

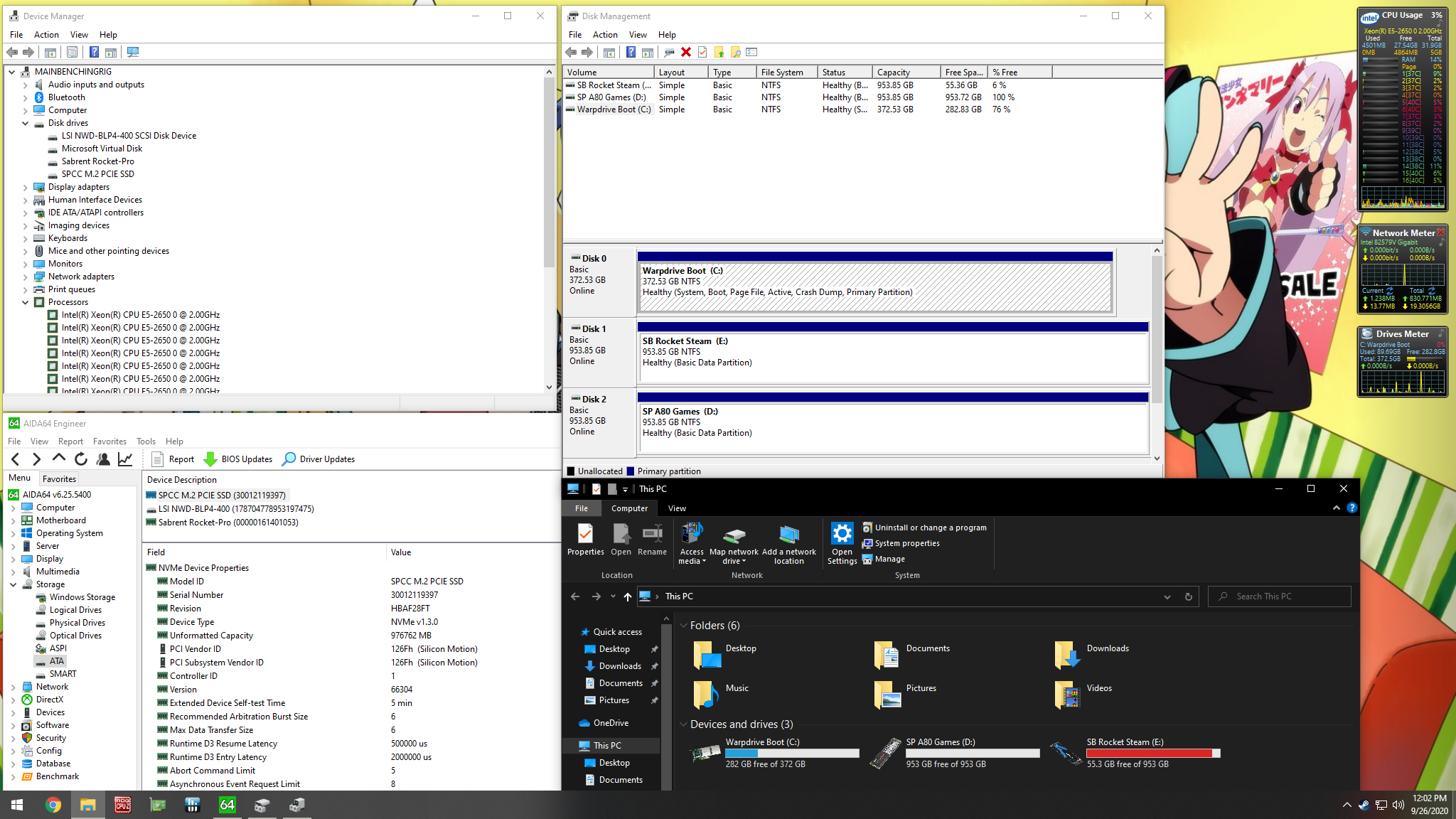

I spent a good couple of hours banging my head against this. My current (now previous) BIOS had the following:

- RST Ver 15.1.0.2545 (how I made that work, I don’t know)

- NVMe support (v3, I think)

- LAN Ver 0.0.19 / 0.1.13

- 2018 microcode updates

Then I took Version A with bifurcation and tried to slap on the following updates:

- RST Ver 15.1.0.2545 (to keep the same mess I had before)

- NVMe support (v4, same as included)

- LAN Ver 0.0.29 / 0.1.16

- 2019/2020 microcode updates

Whenever I did this, the board just refused to boot (showing either code 00 or 19). After some trial and error, I found out that updating RST took everything down for some ungodly reason, so I left it at v13.1.0.2126. I was able to update LAN and uCode, and it POSTed just fine. It could also boot from my 960 Pro in slot 2 (the x8 one) without issues, just as before. I now also see the bifurcation options in the BIOS (along with a mountain of other options that weren’t there before).

Now, I have a couple of questions.

- How could I add RSTe to this BIOS? I can’t seem to update much of anything storage-related without soft-bricking the board (thank goodness for true dual BIOS and BIOS Flashback). I’d like to have RSTe v5.4.0.1039 support on it (if possible; if not, v4.7.0.1017), along with RST v13.1.

- I know the RIVBE has three ASM1061 SATA controllers, but so far none of the BIOSes I have (even stock ones from the ASUS website) include any extra firmware for them, whereas the RIVE BIOSes do. Is that firmware not really needed on the RIVBE? Or does the RIVE just use different chips?

EDIT: Uploaded my BIOS mod.

R4BE-Mod-VerB-Bifurc.zip (4.93 MB)

I randomly got my hands on a RIVBE and saw this amazing thread. Should I go with VerA+Bifurc or VerB+Bifurc? @Lost_N_BIOS at it again, I see… Hopefully the X79-Deluxe modding helped you with this board.

Unless you’re into installing random ES/QS CPUs, there is no benefit to the extra microcode.

Link isn’t working for me, can anyone else confirm?

EDIT: Never mind, it appears all of TinyUpload is down right now.

@Xenophobian - Yes, TinyUpload has been down for over a week. Hopefully it comes back soon!

Mirror added to the original post.

Well, I can confirm this works, at least as well as expected. No matter which slot I use, I never see more than two SSDs, and depending on the options, I see slots 1&3, 1&4, 2&3 and 2&4. Despite that, it does work. Hopefully someday this will be fully usable with all four SSDs in a single x16 slot.

This looks like an active R4BE thread, so maybe I can derail it for a moment, since finding people with this board isn’t easy. Long story short: I’ve got a Rampage IV Black Edition that had a bad iROG chip. I replaced the chip but now need to load firmware onto it. The iROG chip firmware isn’t available anywhere online, but it is possible (and easy) to dump it from a working board.

The process is simple.

0) Power off the system completely (ATX supply unplugged).

1) Locate the DEBUG jumper (2x4 pins) near the CE marking between the lowest two PCIe x16 slots. Pin 1 is toward the rear (I/O side) and the bottom (away from the CPU), and is marked on the PCB. Pin ordering is:

2 4 6 8

1 3 5 7

2) Connect a jumper across pins 5 and 7. This enables bootloader mode on the embedded controller. The board will not function normally in bootloader mode; that’s OK.

3) Connect RX and TX from a 3.3 V USB UART to pins 1 and 3 (1 = TxD, 3 = RxD).

4) Connect the USB UART to another computer.

5) Turn on the ATX power supply. The embedded controller runs off 3.3 V standby (derived from 5VSB), so the board doesn’t really need to be ‘on’ in the normal sense for this to work.

6) Run the application linked below (found on a Russian board-repair forum) and press the blue dump button. Provide a filename, and after a minute or so you’ll have a dump of the embedded controller firmware.

https://drive.google.com/file/d/1exVNC-c…iew?usp=sharing

7) Unplug the ATX power supply and remove the jumper between pins 5 and 7. Your board will be back to normal once power-cycled.

Much appreciated! If anyone is willing to do this and needs some help, I’m happy to do a video call.
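
The header layout from step 1 and the connections from steps 2 and 3 can be written down as a small lookup table, which makes it harder to mix up the rows while wiring. This is only a hypothetical wiring aid that encodes the pin ordering given above (odd pins on the bottom row, even pins on the top). The guide’s “1 = TxD, 3 = RxD” does not say whether those labels are from the board’s or the adapter’s point of view, so the table keeps the original labels; if the dump tool sees nothing, swapping the two data wires is a common first thing to try.

```python
# Hypothetical wiring aid for the 2x4 DEBUG header, using the pin ordering from step 1:
#   top:    2 4 6 8
#   bottom: 1 3 5 7
# Labels for pins 1 and 3 are kept exactly as the guide gives them.

CONNECTIONS = {
    1: "USB UART line labelled TxD in the guide (3.3 V)",
    3: "USB UART line labelled RxD in the guide (3.3 V)",
    5: "jumper to pin 7 (bootloader mode)",
    7: "jumper to pin 5 (bootloader mode)",
}


def pin_position(pin: int) -> tuple[str, int]:
    """Return (row, column) of a header pin; column 1 holds pins 1 and 2."""
    if not 1 <= pin <= 8:
        raise ValueError("the DEBUG header has pins 1-8")
    row = "bottom" if pin % 2 == 1 else "top"
    column = (pin + 1) // 2
    return row, column


def header_diagram() -> str:
    """Render the header as two text rows, marking pins that get a connection with '*'."""
    lines = []
    for row in ("top", "bottom"):
        pins = [p for p in range(1, 9) if pin_position(p)[0] == row]
        cells = [f"{p}*" if p in CONNECTIONS else f"{p} " for p in pins]
        lines.append(f"{row:>6}: " + " ".join(cells))
    return "\n".join(lines)


if __name__ == "__main__":
    print(header_diagram())
    for pin, what in sorted(CONNECTIONS.items()):
        print(pin, pin_position(pin), what)
```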

thanks,

cirthix

Hi, first of all thanks to @agentx007 and @Lost_N_BIOS for their BIOS mod. It is exactly what I was looking for to avoid upgrading my whole PC. I really like this motherboard.

I am trying to bifurcate the PCIe slots to use an ASUS HYPER M.2 X16 card, which can hold four NVMe drives. In my current configuration I am using two Samsung EVO NVMe drives, and I had to install them in sockets 1 and 3 of the HYPER M.2 card, which is plugged into PCIE_X16/X8_3 (per the manual, the third PCIe slot counting from the CPU). This slot appears in the BIOS bifurcation menu as "IOU2 - PCIe Port". I have set it to x4x4x4x4, and I don’t know why no drives are recognized when they are installed in HYPER M.2 sockets 1 and 2, while both are detected in sockets 1 and 3. In the future I would like to use all four NVMe sockets, and if I am not wrong, sockets 2 and 4 should work with x4x4x4x4 bifurcation.

On the other hand, the BIOS bifurcation menu shows "IOU1 PCIe Port" as a PCIe x8 slot and "IOU2 PCIe Port" and "IOU3 PCIe Port" as the two PCIe x16 slots. So is one port missing from the configuration, or can the remaining x8 slot not be bifurcated?

Here are the ports as named in the motherboard manual and as shown in BIOS/Advanced/System Agent Configuration/IOH Configuration:

- PCIE_X16_1 → IOU3 PCIe Port (x8x8, x8x4x4, x4x4x8, x4x4x4x4)

- PCIE_X8_2 → IOU1 PCIe Port (x4x4) ??? / Fixed to x8

- PCIE_X16/X8_3 → IOU2 PCIe Port (x8x8, x8x4x4, x4x4x8, x4x4x4x4) when running at x16 / (x4x4) when running at x8 with all PCIe slots populated

- PCIE_X8_4 → IOU1 PCIe Port (x4x4) ??? / Fixed to x8
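
One way to reason about which HYPER M.2 sockets can show up is to model how a bifurcation mode carves a slot’s lanes into separate links. The sketch below rests on assumptions that are not confirmed for this board or card: socket N of the card uses lanes 4(N-1) to 4N-1, a drive is only enumerated when a link starts at its socket’s first lane, and a slot running at x8 only has lanes 0-7. The function names are hypothetical.

```python
def parse_mode(mode: str) -> list[int]:
    """Turn a BIOS-style mode string such as "x8x4x4" into link widths [8, 4, 4]."""
    widths = [int(part) for part in mode.lower().split("x")[1:]]
    if not widths or any(w not in (4, 8, 16) for w in widths) or sum(widths) > 16:
        raise ValueError(f"unexpected bifurcation mode: {mode!r}")
    return widths


def visible_sockets(mode: str, slot_lanes: int = 16) -> list[int]:
    """Sockets (1-4) that would get their own link under the stated assumptions."""
    sockets = []
    lane = 0  # first lane of the current link
    for width in parse_mode(mode):
        if lane < slot_lanes:               # the link starts on a lane the slot actually wires
            sockets.append(lane // 4 + 1)   # that link owns the socket whose first lane it is
        lane += width
    return sockets


if __name__ == "__main__":
    for mode in ("x8x8", "x8x4x4", "x4x4x8", "x4x4x4x4"):
        print(mode, visible_sockets(mode))
    print("x4x4 on an x8 slot", visible_sockets("x4x4", slot_lanes=8))
```

Under these assumptions, x4x4x4x4 should expose all four sockets, while sockets 1 and 3 together is exactly what an x8x8 split would give. That is the pattern reported in this thread for the x16 slots, which may hint that the x4x4x4x4 setting isn’t fully taking effect on those ports; it is a guess from a simplified model, not a diagnosis.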

Which one is correct for IOU1 PCIe Port?

Would the HYPER M.2 with NVMe drives in sockets 1 and 2 work if inserted in PCIE_X16_1, bifurcated to x4x4x4x4, instead of the GPU? (Just to be able to use the two remaining NVMe sockets of the HYPER M.2.)

My ideal final setup for the PCIe slots would be:

- 1x ASUS HYPER M.2 X16 with four NVMe drives (ideally in PCIE_X16_1, if that works?)

- 2x PCIe x4 SSDs (ASUS RAIDR) using bifurcation in the BIOS (once it is clear which PCIE_X8 slot that is), plus bifurcation riser cables and slots (ideally using PCIE_X8_2)

- 1x Full HD HDMI AVerMedia capture card (ideally in PCIEX1_1, PCIe 2.0)

- 2x ASUS GTX TITAN BLACK in SLI running at x8 (ideally in PCIE_X16/X8_3 and PCIE_X8_4, which will both run at x8, leaving the other slots for the rest of the setup)

Thanks in advance for your help and advice.

@euchloedtj

Funny story, I’m trying to do something similar at the exact same time! I want to bifurcate the last two slots, PCIE_X16/X8_3 and PCIE_X8_4, and it just won’t split the lanes if the last (4th) x8 slot is populated at all. (The 3rd slot is x16 and splittable when the 4th is empty; when the 4th is populated, both become x8 and the 3rd can’t be split.) So, to my understanding, there is a PLX chip onboard that handles that splitting automatically and prevents us from bifurcating the last two slots unless we leave the 4th unused. @Lost_N_BIOS, any insight or help to get around this would be amazing. (P.S. Thank you for your amazing work; this is my main virtualized system, and it wouldn’t be possible without the modded BIOSes.)

Edit by Fernando: Unneeded fully quoted post replaced by a direct address to its author (to save space)

Hi @tylah337, it seems that when PCIE_X8_4 is populated, bifurcation of PCIE_X16/X8_3 fails, as you described. So could we assume that the PCIE_X8 slot that can be bifurcated is slot 2 (PCIE_X8_2, as IOU1 PCIe Port)?

I guess there is a controller that automatically switches PCIE_X16/X8_3 from x16 to x8 when PCIE_X8_4 is populated, so the last slot is fixed, with no bifurcation available. Have you tried a setup like the one I described as ideal for me? That is, bifurcating PCIE_X16_1 into x4x4x4x4 and letting PCIE_X16/X8_3 and PCIE_X8_4 automatically run at x8 for two GPUs in SLI.

@euchloedtj You can freely bifurcate the first and second slots to get, for example, a quad-NVMe software RAID (two NVMe drives in each HYPER M.2), and use the last two slots for the SLI GPUs. That’s what I tried to do, but unfortunately the second GPU won’t fit in the last slot because of its enormous waterblock unless I give up all the USB 2.0 headers, which I need. You can try something like this if you don’t need them! This is exactly why I need to bifurcate the last two slots for my use case. But yes, my guess too is that unless we can somehow disable the PLX chip, it will be impossible, since it splits the 3rd slot’s x16 into x8 for the 3rd slot and x8 for the 4th on its own, overriding the BIOS settings.

@tylah337 I have tried (I think) every possible combination to get four NVMe drives working on a HYPER M.2 in a PCIE_X16 slot, without luck. I have tried bifurcating both PCIE_X16 slots into x4x4x4x4 (and every other combination), but only sockets 1 and 3 are detected; sockets 2 and 4 are not recognized. To use sockets 1 and 2, I have to plug the card into PCIE_X8_2 with x4x4 bifurcation. Have you ever tried populating all four NVMe sockets in a PCIE_X16 slot (x4x4x4x4)? @Lost_N_BIOS, could it be that the x4x4x4x4 bifurcation of the PCIE_X16 slots is missing something?

Regarding the PLX chip, I am not sure whether some kind of hardware jumper exists to bypass the auto-configuration behaviour. The thing is, the retail BIOS doesn’t allow the wonderful setups the modded BIOS does, XD.

Thanks. Is it possible for the modded BIOS to support DDR3 ECC memory?

muar0, ECC support on these boards depends on the CPU. On Intel, ECC or buffered DIMMs only work with Xeon CPUs. The board cannot support buffered DIMMs, but ECC DIMMs will work if you have a Xeon CPU. I am running an E5-1680V2 CPU with 64 GB of ECC DIMMs on a RIVE.

Hello All,

Late to this thread - I was looking to get a copy of the Asus RAMPAGE-IV-BLACK-EDITION-0801-Version-A-Birurcation mod to get my ASUS Hyper M.2 card working with two NVMe drives.

Could someone please repost the Asus RAMPAGE-IV-BLACK-EDITION-0801-Version-A-Birurcation mod?

The two links provided by Lost_N_BIOS in post #13 are no longer working.

Thank you in advance!

Hey Lost_N_BIOS, it looks like these mirrors stopped working. If you still have the files, could you reply with them attached to a forum post so the links don’t go down?

Some users are not active anymore, and private sharing links are not recoverable. Lost has been away since January.

You can try to reach other users using @username.

[GUIDE] Adding Bifurcation Support to ASUS X79 UEFI BIOS

[Guide] - How to Bifurcate a PCI-E slot

Does it support 16 GB ECC memory with an E5-2697v2? This memory is 1.35 V.

My board is a Rampage IV Extreme.