@Fernando @Lost_N_BIOS

Interesting results, I think you are correct about the in-use NVMe SSD models and the AMD RAID driver.

I just banged off the CDM benchmark results using the AMD RAIDXpert2 9.3.0.120 windoze drivers; hadn’t even loaded the 9.3.0.158 windoze drivers yet.

Notably, the Samsung drives perform better at larger capacities.

Samsung 960 EVO, max sequential read / write (MB/s) by capacity:

- 250GB: 3200 / 1500
- 500GB: 3200 / 1800
- 1TB: 3200 / 1900

In contrast, the Samsung 970 PRO performance specifications:

- Sequential read: up to 3,500 MB/s
- Sequential write: 512 GB up to 2,300 MB/s; 1,024 GB up to 2,700 MB/s
- Random read (4KB, QD32): 512 GB up to 370,000 IOPS; 1,024 GB up to 500,000 IOPS

Obviously, the larger the SSD the higher the throughput; and along with that the higher price!

Further to this, the correct configuration of the BIOS could also have a big impact. In particular, a lack of PCIe bifurcation into x4, x4, x4, x4 lanes within the BIOS settings could be degrading your speed on the Samsung 960 EVO array on the X570 chipset. Meanwhile, the ROG Zenith Extreme has PCIe bifurcation built into the stock BIOS, so each NVMe SSD has exclusive access to x4 PCIe lanes. Also, when using the Asus M.2 add-in card, there is a setting in the BIOS to assign x16, x8x8 or x4x4x4x4 to the PCIe slot chosen for the add-in card. If your X570 board doesn’t have built-in PCIe bifurcation, I’m sure @Lost_N_BIOS can make that happen in short order!
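To illustrate the bifurcation settings mentioned above, here is a minimal sketch that parses a mode string such as `x4x4x4x4` and counts how many independent links the slot exposes. It assumes a passive M.2 add-in card without its own PCIe switch, so each link can host exactly one SSD; the function names `split_lanes` and `usable_ssds` are made up for this example and are not part of any BIOS or vendor tool.

```python
def split_lanes(mode: str) -> list[int]:
    """Parse a bifurcation mode such as 'x4x4x4x4' into lane widths per link."""
    return [int(part) for part in mode.lower().split("x") if part]


def usable_ssds(mode: str, slot_lanes: int = 16) -> int:
    """Count the links (and therefore SSDs) a passive card can expose.

    Assumption: no PCIe switch on the card, so one link serves one SSD.
    """
    widths = split_lanes(mode)
    if sum(widths) > slot_lanes:
        raise ValueError(f"{mode} needs more than {slot_lanes} lanes")
    return len(widths)


if __name__ == "__main__":
    for mode in ("x16", "x8x8", "x4x4x4x4"):
        print(mode, "->", usable_ssds(mode), "SSD(s) visible")
```

Under that assumption, an un-bifurcated x16 slot would show only one of the four drives, which is why the x4x4x4x4 setting matters for a four-drive array.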

@hancor :

Thanks for your comments.

All you have written is correct and well known to me. By the way, I have long-term experience with NVIDIA nForce RAID systems since 2006 (look >here<, they called me “Easy Raider” there) and Intel RAID systems since 2011.

That was the reason why I tested the creation of an AMD NVMe RAID0 array yesterday. I just wanted to know how the creation of an AMD RAID array works and whether an AMD RAID0 array performs better or worse than an Intel RAID0 array when exactly the same RAID members are used.

Since I use my computer just for office work and not for the processing of very large files (video encoding etc.), I don’t intend to replace my extremely fast Sabrent Rocket 4.0 with any RAID0 array.

Nevertheless I thank you for your comments and the tip regarding the PCIe bifurcation.

@Fernando

I prefer “Win-RAIDer” the “cutting edge” of bios modding!

appropriate in these viral times.

Yes the PCIe 4.0 drives will kick up some dust in performance.

But I knew when I got the ROG Zenith Extreme, that my daughter would be doing some video editing and my wife’s hobby is photography.

Also, a brother-in-law has a business that digitizes older films and does photo editing, so ganging 4 NVMe drives in RAID is my next project!

That should top out at a crazy 14000MB/s which should last well until PCIe 5.0 and PCIe 6.0 hit the streets for ‘everyman’!
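The 14000MB/s figure is what ideal RAID0 striping gives when four drives each deliver the 3,500 MB/s sequential read quoted for the 970 PRO earlier in this thread. A minimal sketch of that arithmetic, assuming perfect scaling with no controller, driver or bus overhead (real arrays usually land below this ceiling); `raid0_ideal_mb_s` is a name invented for illustration:

```python
def raid0_ideal_mb_s(per_drive_mb_s: int, drives: int) -> int:
    """Upper bound for RAID0 sequential throughput, assuming perfect striping."""
    return per_drive_mb_s * drives


if __name__ == "__main__":
    # 3,500 MB/s read and 2,700 MB/s write are the 970 PRO 1,024 GB spec values
    # quoted above; the four-drive count is the planned array.
    print("read ceiling :", raid0_ideal_mb_s(3500, 4), "MB/s")
    print("write ceiling:", raid0_ideal_mb_s(2700, 4), "MB/s")
```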

I agree though if one has less demanding needs PCIe 4.0 NVMe should be plenty fast until they drown us all in 8k to 16k films, videos and photos.

Hi,

Have you guys tried benching software RAID (Storage Spaces) performance against Intel RAID? Like you, I am getting sub-optimal performance with two M.2 cards in RAID together. It almost feels as if each card is running at x2 speed. Before, I had software-RAIDed two Intel 750 400GB drives and got better performance than I get now running off the RST controller with two much faster drives. So I bet if you run your tests against MS Storage Spaces RAID, it will beat Intel RAID easily.

Thanks

@davidm71

The main disadvantage of a software RAID is that it is not bootable.

That is the reason why I have never tested such RAID configuration.

What about a data drive? So it’s not bootable. That’s OK. Still curious.

@davidm71

Please try it and publish your results within this thread.

I am definitely tempted to test and compare MS Storage Spaces against the Intel motherboard RAID solution. I do have a couple of questions, though. First, when RAID is disabled on my Z590 board, is each individual M.2 slot still routed through the chipset, where the DMI x4 link bottleneck exists, or does each port run independently of the other, each at full x4 speed?

The other question I have: once I upgrade the 10th-gen 10700K CPU to an 11700K, the DMI bandwidth on a Z590 is supposed to double. Does that mean I will see a doubling in performance with Intel motherboard RAID? I ask because the manual states that it “supports PCIe bandwidth bifurcation for RAID on CPU function” and “Only Intel SSDs can active Intel RAID on CPU function in Intel platform”.
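The DMI question can be put into rough numbers. The sketch below treats a DMI 3.0 lane like a PCIe 3.0 lane (8 GT/s with 128b/130b encoding) and assumes a x4 link with the 10th-gen CPU and a x8 link with the 11th-gen CPU on Z590. The 3,500 MB/s per-drive speed is only a placeholder, not a measured value for the drives in this thread, and protocol overhead is ignored, so treat the results as ceilings rather than predictions.

```python
def pcie3_lane_mb_s() -> float:
    """Raw payload rate of one PCIe 3.0 lane: 8 GT/s, 128b/130b, 8 bits per byte."""
    return 8e9 * 128 / 130 / 8 / 1e6


def link_mb_s(lanes: int) -> float:
    """Ceiling for a link with the given lane count (assumed PCIe 3.0 signalling)."""
    return lanes * pcie3_lane_mb_s()


def raid0_behind_link(per_drive_mb_s: float, drives: int, lanes: int) -> float:
    """Ideal RAID0 throughput, capped by the uplink the array sits behind."""
    return min(per_drive_mb_s * drives, link_mb_s(lanes))


if __name__ == "__main__":
    for lanes in (4, 8):  # assumed: x4 with 10th gen, x8 with 11th gen
        cap = link_mb_s(lanes)
        array = raid0_behind_link(3500, 2, lanes)  # placeholder drive speed
        print(f"DMI x{lanes}: link ~{cap:.0f} MB/s, 2-drive RAID0 ~{array:.0f} MB/s")
```

With these assumptions, a two-drive array is link-limited behind x4 and drive-limited behind x8, so sequential results would improve a lot but not necessarily exactly double.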

So I think the latter concern applies only when bifurcation is involved. Beyond that, I wish someone would mod out this “Intel SSDs only” BS. Anyhow, my next step is to replace the CPU. I will save my benchmark scores before and after and post them.

Thanks

@davidm71

Since I have never tried to create an MS Software RAID, I cannot help you.

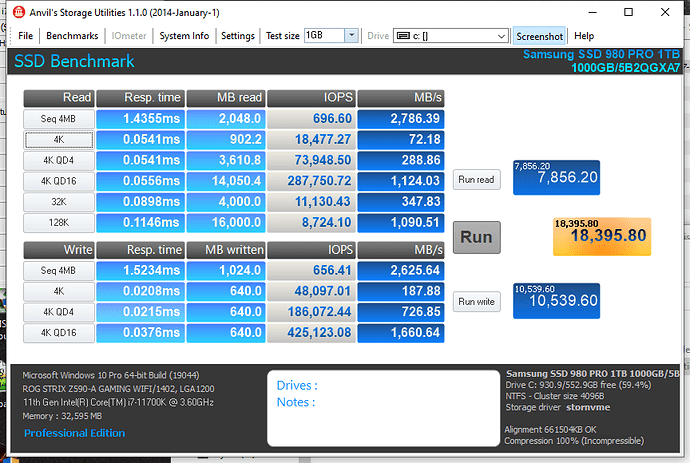

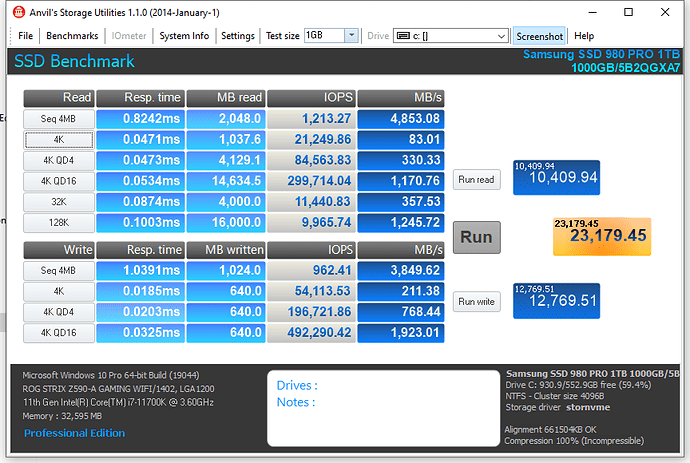

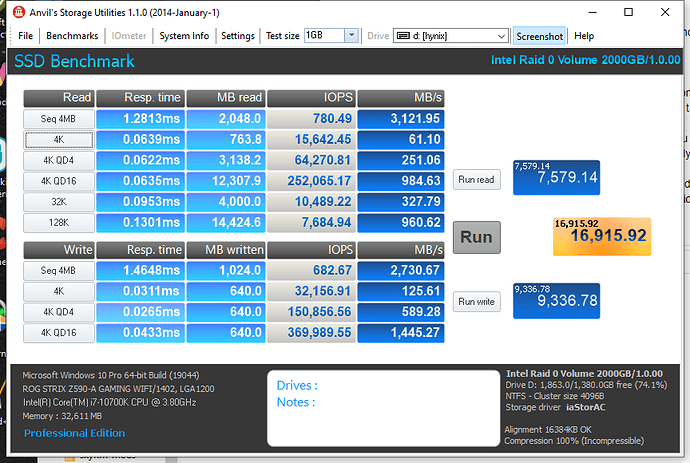

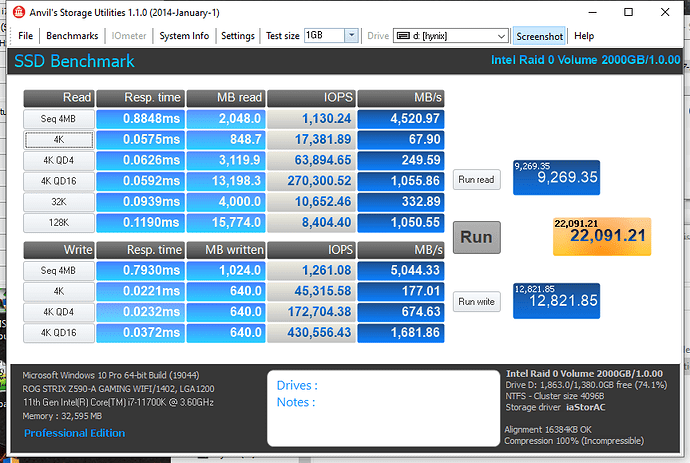

Have some benchmarks to share. First system configuration:

- Asus Z590-A with 10700K (old) / 11700K (new)
- EVGA 3080 FTW3
- G.Skill 2x16GB 3200MHz RAM
- Samsung 980 Pro (single drive configuration)
- SK Hynix P41 Gold 1TB x 2 in RAID 0 configuration
- Intel RST driver 18.31.3.1036

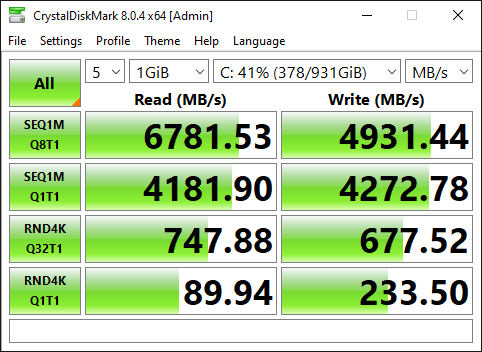

The first score is of the Samsung 980 Pro running at PCIe 3.0 speeds with the 10700K:

The second image is the Samsung 980 Pro running at PCIe 4.0 speeds with the 11700K:

Finally, CrystalDiskMark of the 980 Pro running at PCIe 4.0 speeds (forgot to save the PCIe 3.0 image):

Now for the interesting part of my tests, which shows the doubling of the DMI link affecting motherboard RAID.

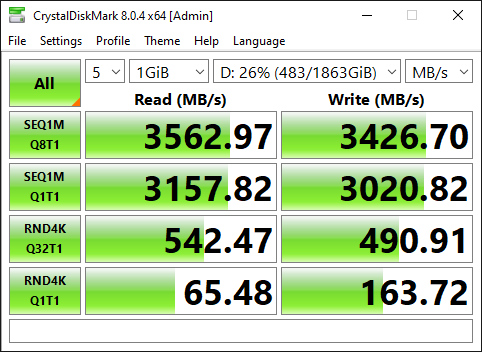

PCIe 3.0 RAID 0:

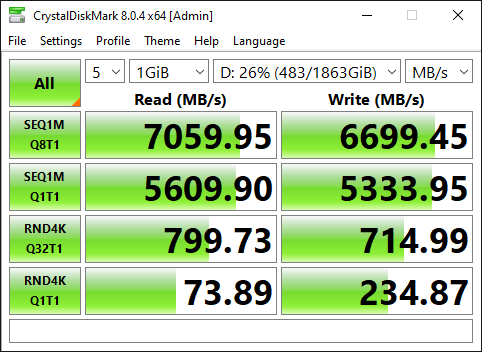

And after the processor upgrade to the PCIe 4.0 11700K platform:

So according to this analysis, with or without RAID, drive performance differed by about 20 to 25 percent according to Anvil; however, sequential speeds doubled according to CrystalDiskMark. In any case, this is my first time breaking through the DMI 3.0 barrier with the RAID controller. Pretty exciting!

@davidm71

Thanks for having done the benchmark tests and posted the results.

Questions:

- Why didn’t you use the currently latest v18 platform Intel RST RAID driver v18.37.6.1010 dated 09/19/2022?

- Did you realize the performance gain of the Intel RAID0 Array while doing the “normal” work with the PC?

- Do you plan any test with a “Software RAID” configuration?

- I made a mistake; I wasn’t aware of version 18.37.6.1010. I will retest and post results; however, I cannot go back to the old PCIe 3.0 processor. At the very least, it will be a comparison of the two driver versions.

- I do not understand your meaning. Did I realize a performance gain while doing “normal” work? I am not sure, as I have not had time to get a feel for the difference, except that Windows does feel like it starts up much faster. Next I am going to test how fast it loads a heavily modded installation of Skyrim, which takes extremely long. That was the primary reason for upgrading the system.

- At this point, testing “Software RAID” is a waste of time and write cycles in my opinion, as the PCIe 4.0 DMI bandwidth negated any desire to try it out. Perhaps I will test it with some spare SSDs in the future for curiosity’s sake.

Thank you

@davidm71

Thanks!

By the way:

- Your inserted Anvil pictures have been cut from a full desktop screen and don’t look perfect. If you want an easier way with a better-looking result, please have a look at >this< tip given by me a very long time ago.

- You can optimize the information for the viewers of your benchmark pictures by editing the most important information into the tool GUI before starting the test (Anvil’s tool and CrystalDiskMark offer such space for typing such information).

Actually I used the built in ‘save picture’ tool in each respective application. I noticed that too. Will do manual edits next time. Thank you.

Edit: About feeling real world performance increase I can now say that I do in fact feel a difference. Before it would take up to 30 seconds to load all the Skyrim mods. Now it takes like 5 seconds.