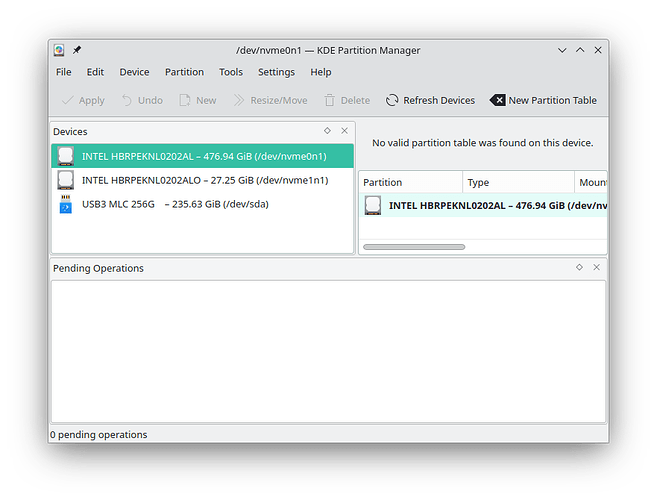

I have an Intel H20 to be exact, which is basically 32G Optane and 512G 670p packaged on a single PCB, and now I can only find one SSD in my OS, not two.

It seems that even the h100 is supported.

No surprise here…

Do not confuse EFI drivers (BIOS module) with RST drivers (Windows).

Your initial doubt was related to EFI driver…

EDIT:

Keep “Fishing…”

Who told you that an Optane disk has to be shown in the BIOS? Do you know this for sure? I don’t…

Older gens of Optane were only set up by the app/driver in Windows.

Have you at least tried to find the correct setup guidance, as it was meant for an 11th Gen CPU and platform… or do you just want to keep “Fishing” in the air…

Since this is a case of a huge compatibility gap between the supported platform and the device generation, I will not spend any more time on this. Wait for other users. If you want to keep “digging” into this matter, I suggest you make a new post, correctly identified and addressed to the Intel H20 on the B250 chipset or M710Q. All the best and good luck.

After replacing the RST module, the 32G Optane SSD still cannot be recognized.

This SSD does not have a PCIe switch, so the motherboard needs to support PCIe splitting, but B250 does not have this function.

I only have the old platform now, and I have checked the NVMe devices in the OS: there is only one. I have previously run some tests on motherboards that fully support this SSD. Basically, even if you don’t use RST to create a RAID, you can still find two NVMe SSDs in the OS. I found some information on another forum. In short, PCIe splitting is needed, so the motherboard must at least support it. It’s not surprising that the 11th generation Core platform you mentioned supports this type of SSD; as we all know, newer motherboards have much better support for PCIe splitting.

Moreover, it seems that you don’t have an H10/H20, so what you wrote sounds like unfounded speculation.
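For anyone who wants to repeat the "how many NVMe devices does the OS see" check, here is a minimal sketch, assuming a Linux system where the kernel exposes NVMe controllers under `/sys/class/nvme`. The function name `list_nvme_controllers` is made up for this example. On a board that splits the slot correctly, an H10/H20 should show up as two controllers; without splitting, only one appears.

```python
from pathlib import Path


def read_attr(ctrl_dir, name):
    """Return a stripped sysfs attribute, or None if it cannot be read."""
    try:
        return (ctrl_dir / name).read_text().strip()
    except OSError:
        return None


def list_nvme_controllers(sysfs_root="/sys/class/nvme"):
    """List NVMe controllers visible to the kernel (hypothetical helper).

    Assumes the Linux sysfs layout: one directory per controller
    (nvme0, nvme1, ...) with 'model' and 'address' attribute files.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    controllers = []
    for ctrl_dir in sorted(root.iterdir()):
        controllers.append({
            "name": ctrl_dir.name,
            "model": read_attr(ctrl_dir, "model"),
            "pci_address": read_attr(ctrl_dir, "address"),
        })
    return controllers


if __name__ == "__main__":
    found = list_nvme_controllers()
    for ctrl in found:
        print(ctrl["name"], ctrl["model"], ctrl["pci_address"])
    print(f"{len(found)} NVMe controller(s) visible")
```

The root path is a parameter only so the function can be pointed at a test directory; on a real system the default should be fine.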

I do plan to return this SSD.

Of course I do not have one, and I never told you I had one. I do have an old 1st Gen 16GB… but you can’t come to a forum assuming that whoever is helping/tipping you has, for sure, the same issue or hardware models you have/want.

That’s why I finished my statement by saying that you should wait for other users.

Now the “Speculation”… you my friend can take it as you want and where you like it more… Over_n_Out.

Essentially, your Optane 16G should be M10. Besides the difference in flash memory chips compared to regular SSDs, there are not many distinctions. However, H10/H20 is a combination of QLC SSD and Optane, and in this case, the situation is entirely different. Intel designed this to enable Optane to act as a cache for QLC SSDs (achieved through software RAID like RST). Additionally, upon inspecting the PCB of this SSD, I did not find a PCIe switch chip. Considering that this is an OEM product typically paired with specific motherboards, it is quite normal to omit the PCIe switch chip from a cost-saving perspective.

As a side note, I actually have a 280G 900p which works perfectly fine on my AMD-based system.

I believe I have found the answer and there’s no need to waste more time. Even so, I usually post a reply as a form of documentation, so that others who search for this later don’t waste their time.

Yes, you do that, the forum and the users will surely appreciate it, that is what a forum is all about…sharing and discussing it, and you have my thanks for the initiative if accomplished.

"INTEL OPTANE H20 COMPATIBILITY

The Intel H20 Optane Memory SSD will be available to oem manufacturers… It is only supported by Intel 11th Gen Core Series Processors with the Intel 500 Series Chipset and through use of the latest IRST 18.1 or later software release.

Even if you get your hands on an H20 module, you cannot simply throw it into a system, format it and believe your good.

As we mentioned, the H20 requires bifurcations where two PCIe 3.0 lanes feed into the Optane Memory chip while the other two feed into the Intel QLC NAND. …"

Same here: it is an OEM part and needs newer CPU capabilities to work properly. In addition, the NVMe disks are captured early by the Intel VMD pre-OS firmware and are no longer transparent to the OS, just to RST.

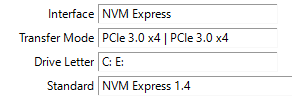

@Dark_iaji What does CrystalDiskInfo show for ‘Transfer Mode’?

That’s not true. Intel submitted source code to support RST on Linux, and the code is available. The kernel developers just won’t accept it because they would lose the ability to handle SSD quirks, among other concerns.

It’s not proprietary, it’s just bad.

Specifically:

RST is unsafe by design, and the kernel developers won’t support it.

- No NVMe device power management
- No NVMe reset support
- No NVMe quirks based on PCI ID
- No SR-IOV VFs
- Reduced performance through a shared, legacy interrupt

CDI in fact does not display the transfer mode, which might be due to some peculiar malfunction.

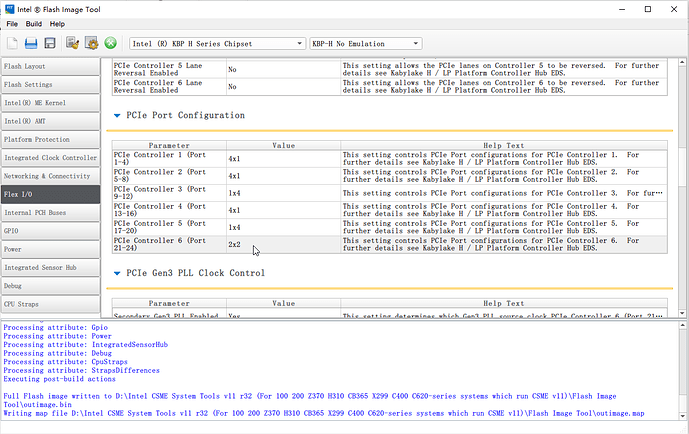

In a recent development, it turns out that the B250 can actually use the H10/H20. The B250 PCH supports PCIe splitting, but it requires using the Intel Flash Image Tool to change the split mode of PCIe controller 6 to 2x2.

In any case, for now, the problem seems to have been resolved. Actually, I am not particularly inclined to use RST; there are better options available for Linux such as Bcachefs.

As a side note, the SPI programmer made with the FTDI FT4232H Mini-Module is exceptionally efficient. It is significantly faster than the CH341A; I can flash the 16M BIOS of the M710q in just under half a minute.

Good… you found the FLEX I/O with FITC … a lot of settings on PCIe …nice…let us know how it goes !!!

Another issue is that only one SSD can be bootable. If intending to use Optane as a cache, this doesn’t seem to pose a problem, but if the intention is to install the OS onto Optane, it becomes a bit troublesome.

As you can see, manually adjusting the BIOS is necessary for PCIe splitting. I am unsure whether Setup_ver can simplify this process.

So by configuring the corresponding port to 2x2 you’ll get PCIe 3.0 x2 for each of the disks, which is about 1.9 GB/s?

Doesn’t look too attractive to me, what’s the reason you’ve chosen this configuration?
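The roughly 1.9 GB/s figure can be sanity-checked with a small back-of-the-envelope calculation. This is only a sketch: it uses the standard PCIe 3.0 numbers (8 GT/s per lane, 128b/130b line encoding) and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function name is made up for the example.

```python
def pcie3_bandwidth_gb_per_s(lanes):
    """Theoretical PCIe 3.0 link bandwidth in GB/s (decimal gigabytes).

    Ignores TLP/DLLP protocol overhead, so this is an upper bound.
    """
    gigatransfers_per_s = 8.0      # PCIe 3.0 raw rate per lane
    encoding_efficiency = 128 / 130  # 128b/130b line encoding
    bits_per_byte = 8
    return gigatransfers_per_s * encoding_efficiency * lanes / bits_per_byte


if __name__ == "__main__":
    for lanes in (2, 4):
        print(f"PCIe 3.0 x{lanes}: {pcie3_bandwidth_gb_per_s(lanes):.2f} GB/s")
```

So each half of a 2x2 split tops out just under 2 GB/s, exactly half of what a full x4 link would offer.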

I am more intrigued by the amalgamation of this SSD with Optane itself, not Intel’s QLC, which doesn’t hold much appeal. If you ask me to find a reason for using this thing, all I can say is, isn’t it cooler than a regular SSD?

Well, therein lies the issue with the Optane product line: it’s just too expensive. Small-capacity Optane drives aren’t particularly useful, and it’s hard to justify using up a precious M.2 slot for a 16GB/32GB Optane drive. In normal circumstances, Optane truly doesn’t serve much purpose; otherwise, Intel and Micron wouldn’t be divesting themselves of Optane.

Honestly, I’d prefer an SSD with a larger DRAM cache connected over an x4 PCIe link.

I just like collecting all kinds of freaks.

I wanted to ask something else. I would like to update the RaidOrom.bin file from version 17.8.0.4507 to version 17.8.4.4671, but the older version comes from this website

Only the file from version 17.8.0.4507 is located in the src/tools/legacy directory, and RaidOrom is there with both the .ffs and .bin extensions. The question is whether I can just replace the .bin with the newer version, or whether it will also be necessary to replace the .ffs file. And is it a good idea to update RaidOrom.bin for an Acer Aspire F5-573G at all? I just want to patch the EFI RaidDriver vulnerabilities.

@martin4562

First of all, this has no impact on systems that are not RAID configured, and no impact on standard AHCI or NVMe system disks.

We don’t know the source and for what system that flasher is designed…

The .bin file is an OpROM; it has nothing to do with the EFI driver (DXE), which is the .ffs file.

On a non-RAID system, the BIOS will load the standard EFI AHCI/SATA driver (DXE); the OpROM is only invoked when RAID is used.

Both need to be replaced in the BIOS region image of the system… that is a mod, and as a mod it carries risks for any user and his system.

EDIT: Correct. And the original BIOS restores the module.

My advice is, the risk of a broken laptop isn’t worth it.

As for the laptop, it is configured in AHCI mode and I cannot change it in the BIOS because the manufacturer has blocked this option, so if I replace this file as I wrote earlier, nothing will probably happen.

If something went wrong, let’s say with updating the EFI driver to a newer one, can it be restored to the original BIOS version or will the entire module have to be replaced?

Today (05/31/2024) I have updated the start post of this thread.

Changelog:

- New: “Pure” Intel-RST EFI VmdDriver v20.1.0.5751 (without header) (thanks to Pacman for the source package)
- Renamed: “Pure” Intel RST EFI VmdDriver v19.5.6.5738 (now named “VmdDriver”)