Thank you for your response and clarification.

Dear Fernando,

Thank you for this thread; it helped me find the right driver (Intel p600) for my Windows installation.

But I have an issue: the Windows 7 DVD installer won’t let me load the driver because it’s not “signed”.

I already tried pressing the F8 key while the DVD boots and choosing “Disable driver signature enforcement”, but nothing changed.

Do you or anyone else have a suggestion?

Many thanks

Paolo

@antimodes :

Welcome to the Win-RAID Forum!

Which specific driver (manufacturer/sort/version) did you try to load at the beginning of the Win7 installation?

Regards

Dieter (alias Fernando)

Dear Dieter,

Thank you for your fast reply, I really appreciate it!

Following the first post of this thread, I took this one: “64bit Intel NVMe Driver v4.4.0.1003 mod+signed by me”, because it worked on another hard disk (not an NVMe SSD) with Windows already installed.

Excited about that, I decided to install Windows 7 onto the NVMe SSD by loading that driver, but unfortunately Setup shows a message that I can’t load the driver because it’s unsigned.

So, do I need to create a custom Windows 7 DVD to solve that problem?

Many thanks!

@antimodes :

Yes, you have to integrate the MS NVMe Hotfix (download link is within the second post of this thread) into the boot.wim and install.wim of the Win7 Image.

By the way:

1. The "64bit Intel NVMe Driver v4.4.0.1003 mod+signed by me" has been correctly digitally signed by me, but the OS Setup cannot verify the trustworthiness of the signature at the early installation stage.

2. None of the Intel NVMe drivers that I have modified support non-NVMe SSDs.
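
For anyone following the advice above, the integration step could be sketched with DISM roughly like this. It is only a minimal sketch: the helper name `dism_integration_commands`, all paths, the hotfix file name and the image index are placeholders (assumptions, not taken from this thread), and the script only prints the command lines instead of running them. The DISM options used (`/Mount-Wim`, `/Add-Package`, `/Unmount-Wim /Commit`) are the standard ones, but please check which indexes your own boot.wim and install.wim contain before using anything like this.

```python
def dism_integration_commands(wim_file, index, mount_dir, package):
    """Build the DISM command lines that add one package to one WIM image.

    All arguments are caller-supplied placeholders; nothing is executed here.
    """
    return [
        # 1. Mount the chosen image index of the WIM file into an empty folder.
        f'dism /Mount-Wim /WimFile:"{wim_file}" /Index:{index} /MountDir:"{mount_dir}"',
        # 2. Add the hotfix package (.msu or .cab) to the mounted image.
        f'dism /Image:"{mount_dir}" /Add-Package /PackagePath:"{package}"',
        # 3. Unmount the image and write the changes back into the WIM file.
        f'dism /Unmount-Wim /MountDir:"{mount_dir}" /Commit',
    ]


if __name__ == "__main__":
    # Hypothetical example paths; replace them with your own.
    for line in dism_integration_commands(
        r"C:\win7\sources\boot.wim", 2, r"C:\mount", r"C:\hotfix\nvme-hotfix.msu"
    ):
        print(line)
```

The same three steps would have to be repeated for every image index that needs the hotfix, in both boot.wim and install.wim.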

Hi there

So I’ve been getting a DPC_WATCHDOG_VIOLATION BSOD daily on my laptop (MSI GE75 Raider 8SE) when it is under gaming strain. I’ve researched a lot and absolutely tried everything possible including factory reset, different versions of drivers, etc.

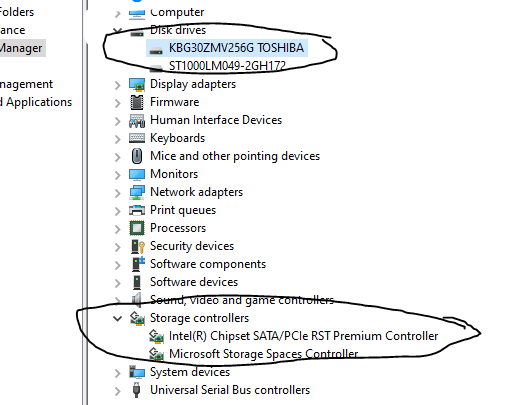

After running various debugging tools, I think I have narrowed it down: it’s my Toshiba KBG30ZMV256G SSD acting up. Only the disk jumps to 100% usage out of nowhere (the rest of the system is stable), plateaus for about 15 seconds, and then the BSOD appears.

The reason I’m posting here is that I have a feeling the storage controller driver used by default on the laptop isn’t fit to handle the Toshiba SSD. I came here looking for NVMe drivers, but those linked in the Toshiba section seem a bit old. Would they be worth a try? I know this could just plainly be a hardware problem.

I’ve attached a screenshot of my Device Manager showing the storage controller and driver in use.

Hi, @Fernando !

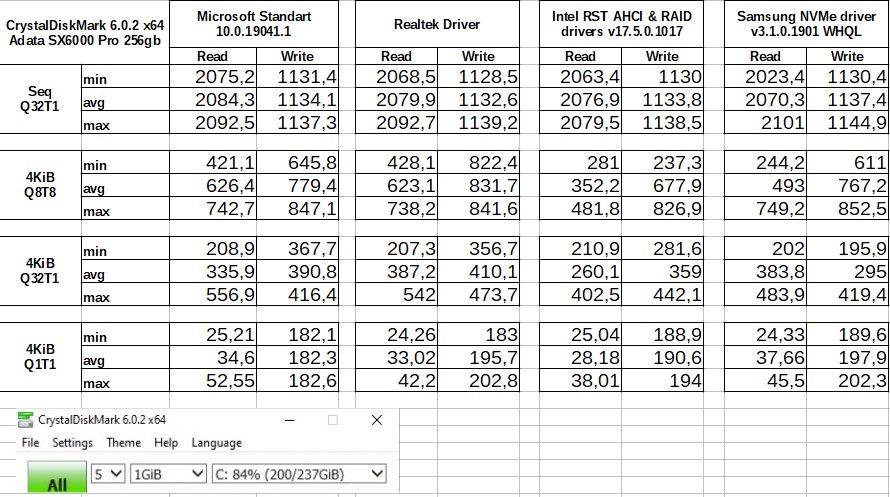

Thanks for your NVMe drivers. I used them many times while I had an Intel 660p, but after replacing it with an Adata SX6000 Pro (Realtek RTS5763DL controller) I had some problems, because I couldn’t find the original driver (I tried to find it on and off for 2 years or more). But never mind.

And now it’s done: today I found it.

Compatible devices:

PCI\VEN_10EC&DEV_5760

PCI\VEN_10EC&DEV_5761

PCI\VEN_10EC&DEV_5762 (Adata SX6000 pro)

PCI\VEN_10EC&DEV_5763

PCI\VEN_10EC&DEV_5765

PCI\VEN_10EC&DEV_5766

I hope it will be useful for your NVMe driver collection.

@back37 :

Welcome to the Win-RAID Forum and thanks for the 64bit Realtek NVMe driver.

Have you already tried any other NVMe driver and compared it with the Realtek one?

The mod+signed generic Samsung NVMe driver v3.3.0.2003 may work fine with your ADATA NVMe SSD.

Enjoy the Forum!

@all:

Update of the start post

Changelog:

- Realtek NVMe drivers:

- new: 64bit Realtek NVMe driver v1.4.1.0 for Win7-10 x64 dated 07/19/2018

Good luck with this Realtek NVMe driver!

Dieter (alias Fernando)

@Fernando

I tested the Samsung driver before, and in daily use the drive sometimes performed poorly, with stalls or low speeds.

[Attached screenshot: ??? 2020-07-02 001837.jpg]

That’s a big NO-NO! The drive has no time for GC, TRIM and emptying SLC cache…

You have to manually TRIM and wait for quite a bit (1h+) to test again. And Adata (and other drives) are notorious for not emptying the SLC cache even after TRIM command!

@MDM

OK, thanks… I will try again later, when I have enough time… if I don’t forget.

Is the Intel NVMe driver v6.2.0.1234 now available to replace v5.5.0.1360?

The DUMo application suggests it, but I can’t find it anywhere yet.

@100PIER :

Within the next 2 hours I will offer the 64bit Intel NVMe driver v6.3.0.1022 WHQL for Win8-10 x64, which supports many, but not all, Intel SSDs.

If there is interest, I can add a mod+signed variant, which may support all Intel SSDs.

Update of the start post

Changelog:

- Intel NVMe drivers:

- new: 64bit Intel NVMe driver v6.3.0.1022 WHQL for Win8-10 x64 dated 01/13/2020

- new: Intel RSTe Storage Drivers & Software Set v6.3.0.1031 for Win7-10 x64 dated 02/13/2020

- new: 64bit Intel NVMe Driver v6.3.0.1022 mod+signed by me on 07/06/2020

Good luck with these new Intel NVMe drivers!

Dieter (alias Fernando)

I picked up several Toshiba XG6 NVMe drives at a good price. These are OEM drives. I’m using them on Supermicro AOC-SLG3-2M2 PCIe adapters that each hold 2 M.2 drives.

My motherboard is a Supermicro X10-SRL-F that supports bifurcation. While benchmarks for a single drive give the expected performance, when I run 2, 4 or 8 of these XG6 drives in a striped volume, the performance is nowhere near 2, 4 or 8 times that of a single unit.

So I’m thinking it is a driver issue. I’m running Windows 10 Pro and the Standard NVM Express Controller is dated 20-Jun-2006 (version 10.0.18362.693).

I downloaded the OCZ/Toshiba NVMe driver from the first page in this thread (v1.2.126.843 WHQL), but when I attempt to update the drivers from Device Manager, it tells me that the best driver is already installed.

Any suggestions on how I might be able to update my ancient drivers to a newer version?

Thanks!

@crazydane :

Welcome to the Win-RAID Forum!

Here is my comment:

- All Win10 in-box MS drivers are shown as being dated 21.06.2006, but they are as new as the OS. Look at the version of the driver and the Build version of your OS.

- It is an illusion to believe that the user can quadruple the performance by creating a RAID0 array consisting of 4 NVMe SSDs. You may get the maximum speed by running 2 or 3 SSDs as RAID0 members. The bottleneck of the data transfer speed is the chipset.

- When the Device Manager gives you the message that “the best driver is already installed”, you can nevertheless get the desired and definitely compatible driver installed by using the “Have Disk” option.
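
To illustrate the first point above: the third field of an in-box driver's version string is the OS build it shipped with, so it can be compared with the build number of the running Windows. The following is only a small sketch; the names `DriverVersion`, `parse_driver_version` and `matches_os_build` are made up for this example, and the OS build has to be supplied by the user (for instance from `winver`).

```python
from typing import NamedTuple


class DriverVersion(NamedTuple):
    major: int
    minor: int
    build: int
    revision: int


def parse_driver_version(text: str) -> DriverVersion:
    """Split a four-part version string like '10.0.18362.693' into integers."""
    parts = text.strip().split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four dot-separated fields, got: {text!r}")
    return DriverVersion(*(int(p) for p in parts))


def matches_os_build(driver_version: str, os_build: int) -> bool:
    """True if the driver's build field equals the given OS build number."""
    return parse_driver_version(driver_version).build == os_build


if __name__ == "__main__":
    # Version string quoted earlier in this thread; 18362 is an example OS build.
    print(parse_driver_version("10.0.18362.693"))
    print(matches_os_build("10.0.18362.693", 18362))
```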

Questions:

1. Which are the HardwareIDs of the currently in-use NVMe Controller? You get them by doing a right-click onto it and choosing the options “Properties” > “Details” > “Property” > “HardwareIDs”.

2. How did you create the RAID array?

3. Have you already tried to create an Intel RSTe RAID0 array from within the BIOS?

Regards

Dieter (alias Fernando)

@crazydane : To follow up on what @Fernando said, you would need a dedicated RAID Card for that…

@Fernando

First, a little more background. The NVMe units I’m using are all PCIe Gen 3.0 x4 units, so each one has 4 PCIe 3.0 lanes directly to the CPU (E5-2683 v3, 14 cores). I was able to accomplish this by enabling bifurcation in the BIOS, which basically sets ports 2 and 3 on the CPU to use x4x4x4x4 instead of x8x8 or x16. So the chipset is not involved at all, as I have a direct x4 PCIe path to the CPU for each M.2 device.

Each NVMe has a dedicated entry in device manager under Storage controllers. The hardware Ids are the same for all 4 controllers, and they are:

PCI\VEN_1179&DEV_011A&SUBSYS_00011179&REV_00

PCI\VEN_1179&DEV_011A&SUBSYS_00011179

PCI\VEN_1179&DEV_011A&CC_010802

PCI\VEN_1179&DEV_011A&CC_0108
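
For readers who want to decode such strings: a PCI HardwareID is just a set of `&`-separated fields (vendor, device, optional subsystem, revision and class code). Below is a minimal sketch of a parser; the function name `parse_hwid` and the regular expression are my own illustration and only cover the four ID shapes listed above. In the class code 010802, 01 stands for mass storage, 08 for non-volatile memory and 02 for the NVM Express interface.

```python
import re

# Covers the ID shapes listed above: VEN/DEV plus optional SUBSYS, REV and CC.
HWID_PATTERN = re.compile(
    r"^PCI\\VEN_(?P<vendor>[0-9A-F]{4})&DEV_(?P<device>[0-9A-F]{4})"
    r"(?:&SUBSYS_(?P<subsys>[0-9A-F]{8}))?"
    r"(?:&REV_(?P<rev>[0-9A-F]{2}))?"
    r"(?:&CC_(?P<class_code>[0-9A-F]{4}(?:[0-9A-F]{2})?))?$",
    re.IGNORECASE,
)


def parse_hwid(hwid: str) -> dict:
    """Return the fields present in a PCI HardwareID as an upper-case dict."""
    match = HWID_PATTERN.match(hwid.strip())
    if match is None:
        raise ValueError(f"not a recognised PCI HardwareID: {hwid!r}")
    return {k: v.upper() for k, v in match.groupdict().items() if v is not None}


if __name__ == "__main__":
    for hwid in (
        r"PCI\VEN_1179&DEV_011A&SUBSYS_00011179&REV_00",
        r"PCI\VEN_1179&DEV_011A&CC_010802",
    ):
        print(parse_hwid(hwid))
```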

If I look in HWiNFO, I see the following for each NVMe unit:

PCIe x4 Bus #3 - Toshiba AIS, Device ID: 011A

PCIe x4 Bus #4 - Toshiba AIS, Device ID: 011A

PCIe x4 Bus #5 - Toshiba AIS, Device ID: 011A

PCIe x4 Bus #6 - Toshiba AIS, Device ID: 011A

HWiNFO also confirms that each NVMe controller has a 4x link width with a bandwidth of 8.0 GT/s each (PCIe 3.0).
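
As a rough sanity check of what such a link can carry: PCIe 3.0 runs at 8.0 GT/s per lane with 128b/130b encoding, so the theoretical ceiling can be worked out as below. This is only back-of-the-envelope arithmetic (the function name `pcie3_bandwidth_mb_s` is made up for this example); it ignores packet and protocol overhead, so real drives will always land somewhat lower.

```python
def pcie3_bandwidth_mb_s(lanes: int, gt_per_s: float = 8.0) -> float:
    """Theoretical PCIe 3.0 link bandwidth in MB/s (10^6 bytes per second).

    Only the 128b/130b line encoding is accounted for, no protocol overhead.
    """
    payload_bits_per_lane = gt_per_s * 1e9 * 128 / 130
    return payload_bits_per_lane / 8 / 1e6 * lanes


if __name__ == "__main__":
    per_drive = pcie3_bandwidth_mb_s(4)
    print(f"x4 link ceiling: {per_drive:.0f} MB/s")
    # Upper bound for a stripe, assuming each drive keeps its own x4 link.
    for drives in (2, 4, 8):
        print(f"{drives} drives: {drives * per_drive:.0f} MB/s")
```

So each x4 link tops out a little below 4,000 MB/s, and a stripe of N drives cannot exceed N times that figure, whatever the driver.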

I created my test arrays simply by setting up a striped volume using Disk Manager with 2, 4 or 8 NVMe devices.

The dual NVMe to PCIe adapters I’m using do have an option to change the SMBus address for each card. So let me try setting them all to different addresses to see if that will address the issue.

ASRock makes a Quad M.2 Card, a 16-lane NVMe to PCIe adapter, and based on the reviews I have seen, it is definitely possible to get close to 4x the performance of a single NVMe with it. One review I saw was getting reads of 12,031 MB/s, which is 4x the performance of a single NVMe. So far the best I’ve been able to get is 6,3543 MB/s on reads and 4,853 MB/s on writes, so I’m leaving a lot on the table and I’m trying to determine the root cause.

I’ll try changing the hardware id (SMBus address?) on one of the adapter cards to see what happens and report back.

That is software RAID, so the CPU does all of the work, and you cannot expect it to "just" work optimally. So, like I said, you would need a dedicated RAID Card for that, one that does the processing instead of the CPU…

But in a striped array (RAID0) there is no parity calculation, so I don’t believe it would put any load on the CPU at all. If I was running RAID5 or 6, I would agree that a dedicated RAID card would be the way to go.

I in fact do that for my media server, where I run an Areca 1882 card connected to a SAS2 backplane expander with 24 drives on it configured as a RAID60 (2x12 RAID6 arrays striped together).

Based on reviews I have read, a Windows striped volume is just as fast as a RAID0 setup in the BIOS using Intel’s RSTe.

This is all just for testing and getting any hardware issues I have sorted out. My goal is to deploy several of these as nodes in a Storage Spaces Direct cluster that will be all NVMe.