I built an HTPC off of an Asus P8H77-i motherboard. It has dual 60 GB SSDs in RAID1 and 4x 4TB WD Reds in R5. About 6 months ago, I was able to get 600+ MB/s writes on RAID1 and 350+ on RAID5.

However, for some reason I can’t track down, now the C drive R1 reports write speeds of 30-40 MB/s and the RAID5 at 70 MB/s. I’ve played with cache settings in Device Manager and RST and I’ve tried various RST drivers from 10.x, 11.x, 12.x and 13.x branches.

I’m running Windows Server 2012 R2 on it. I’ve exhausted everything trying to chase this down. Write performance on both R1 and R5 has fallen way down, and surprisingly the SSD R1 is even slower than the HDD R5. I’m using CrystalDisk to measure performance, and I’ve also had issues copying files between the C and D drives in either direction. The performance is very erratic: it goes fast, drops to 0, then goes fast again.
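The surging copy behaviour described above can be made visible without a GUI benchmark. The following is only a minimal sketch, not a replacement for CrystalDisk: it writes a test file in fixed-size chunks, forces each chunk to disk, and records the throughput per chunk. The function names, the file name `write_burst_test.bin` and the 64 MB / 8 MB sizes are arbitrary assumptions chosen for illustration.

```python
import os
import tempfile
import time


def measure_write_bursts(path, total_mb=64, chunk_mb=8):
    """Write total_mb to `path` in chunk_mb pieces and return MB/s per chunk.

    Each chunk is flushed and fsync'ed so the timing reflects the storage
    stack rather than only the OS file cache.
    """
    chunk = os.urandom(chunk_mb * 1024 * 1024)  # random data, not compressible
    rates = []
    with open(path, "wb") as f:
        for _ in range(total_mb // chunk_mb):
            start = time.perf_counter()
            f.write(chunk)
            f.flush()
            os.fsync(f.fileno())
            elapsed = time.perf_counter() - start
            rates.append(chunk_mb / elapsed if elapsed > 0 else float("inf"))
    return rates


def summarize(rates):
    """Return min, max and the mean of the per-chunk rates."""
    return {
        "min": min(rates),
        "max": max(rates),
        "mean_of_chunks": sum(rates) / len(rates),
    }


if __name__ == "__main__":
    # Hypothetical target: a file in the temp directory of the drive under test.
    target = os.path.join(tempfile.gettempdir(), "write_burst_test.bin")
    try:
        rates = measure_write_bursts(target)
        for i, rate in enumerate(rates):
            print(f"chunk {i:3d}: {rate:8.1f} MB/s")
        print(summarize(rates))
    finally:
        if os.path.exists(target):
            os.remove(target)
```

A large gap between the minimum and maximum per-chunk rate would match the "fast, then 0, then fast again" pattern. To test a specific array, point `target` at a path on that drive letter.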

Any advice would be appreciated.

@ BrianV:

Welcome to the WinRAID Forum!

It is normal that a RAID array performs best right after its creation on new or secure-erased drives, but in your case the speed loss is too extreme to be explained that easily.

Questions:

- Which sort of SSDs are you using?

- How full are your RAID arrays, i.e. how much free space is left?

- Did you change any BIOS settings or install a program that may have an impact on the performance?

- Which non-OS programs are running in the background (check the “Startup” tab of the Task Manager)?

Regards

Fernando

Fernando,

Thanks for the assistance, I must have spent two hours on your site last night.

- For SSD, I am using 2 Sandisk “Standard” 64 GB SSDs

- For capacity, I am using 23/60 GB, so it is mostly empty

- I didn’t change any BIOS settings, but after I identified the issue, I did update my BIOS since a newer one was released but it didn’t fix anything

- I’ve tried with most items shut off. Basically it is a HTPC so it has Plex, FTP Server (Filezilla), a cloud Backup System, Dropbox, uTorrent and others on it. Since it is Windows Server, there is no Startup tab as things are managed by Services, but I can show you that with pretty much every end-user app disabled/killed, it still doesn’t impact performance at all.

I did shut down everything and ran CrystalDisk, just using 50MB since it’s fast, but the performance gets worse with larger transfers. Here is a screenshot which has most usable information:

https://dl.dropboxusercontent.com/u/112537/SSD.png

The best I’ve seen is 710 down and 68 up, but when new, I was getting 900+ down and 400 up.

After I posted, I even broke the RAID intentionally and tested each SSD alone in degraded mode. The performance was about the same (read was a little lower), but write was stuck at ~30-50 MB/s. I had hoped to degrade the array, convert to normal single disk mode, secure-erase the removed SSD, reattach it and make RAID 1, then break the other one, secure-erase, etc. However, I couldn’t find in RST how to convert a degraded R1 array to normal single drive mode.

My D drive, which is 4x 4 TB HDDs, does 400-600 MB/s read and 70-100 MB/s write. It used to write at 400+ MB/s when new. Finally, this is Windows Server 2012 R2, so I’m mostly using Win 8.1 64-bit drivers, but it has worked in the past.

Also, here is a link to CrystalDisk which shows SSD (both SSD report the exact same figures except power on count as for some short time on another system I used one SSD alone):

https://dl.dropboxusercontent.com/u/112537/crystal.png

Trim, NCQ, everything is running. In Disk Optimization I did manually trim it yesterday with no help.

It also shows device manager.
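Whether Windows itself has TRIM (delete notifications) enabled can be checked with the built-in command `fsutil behavior query DisableDeleteNotify`. The sketch below wraps that command; the helper names are made up for illustration, and the parser assumes the output contains a line of the form `DisableDeleteNotify = 0` (optionally prefixed with `NTFS` on newer Windows versions). Note that a value of 0 only means the OS issues TRIM; it says nothing about whether the RAID driver passes the command on to the member drives.

```python
import re
import subprocess
import sys


def parse_delete_notify(output):
    """Return True if TRIM is enabled for NTFS, False if disabled, None if unknown.

    Assumed line format: "DisableDeleteNotify = 0" or
    "NTFS DisableDeleteNotify = 0 ...". A value of 0 means TRIM is enabled.
    """
    for line in output.splitlines():
        if "ReFS" in line:
            continue  # only the NTFS (or unprefixed) setting is of interest here
        match = re.search(r"DisableDeleteNotify\s*=\s*([01])", line)
        if match:
            return match.group(1) == "0"
    return None


def query_trim_enabled():
    """Run fsutil (Windows only, elevated prompt) and parse its output."""
    result = subprocess.run(
        ["fsutil", "behavior", "query", "DisableDeleteNotify"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_delete_notify(result.stdout)


if __name__ == "__main__" and sys.platform == "win32":
    state = query_trim_enabled()
    print({True: "TRIM enabled in the OS",
           False: "TRIM disabled in the OS",
           None: "could not parse fsutil output"}[state])
```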

@ BrianV:

Thanks for answering my questions and for your additional reports.

So far I don’t have any idea regarding the origin of your performance loss. There seems to be a problem that affects all drives. A defect of the SSDs/HDDs themselves can be excluded, because such a defect would not hit all of them at the same time.

Actually I suspect either a program that is running in the background and has a strong negative impact on the performance (please check the entries within the “Startup” tab of the Task Manager) or a hardware problem (what about the PSU?).

Thanks. It is a Windows Server so it doesn’t have a Startup tab. Server handles startup via services only. I did go into Task Manager and killed just about every non-essential running process without success. Let me look at it again.

And what about the msconfig command?

Another idea:

Please run the Device Manager, right-click on each of your disk drives, one after the other, and choose "Properties" > "Policies". What do you see? Which write caching options have been checked?

MSCONFIG also doesn’t show Startup, it just simply isn’t something Server supports, but I did disable all unnecessary services and am doing a Minimal Boot.

As far as Device Manager, I have tried every combination of Cache and Flushing. It seems I can get into 70-80 MB/s by having the first box checked but not flushing.

I booted into Safe Mode-Minimal, which means no services are running, not even networking. It is the most basic Windows boot environment. The performance is the same, so unfortunately I think it is not related to another program.

I fear my only other method to investigate is to rebuild, but I really don’t want to do that. I will try one more thing. I have a USB 3.0 SSD drive, I can see if it performs ok on various machines. I’ll report back.

So I used a USB 3.0 SSD drive which regularly does over 250 MB/s, it worked fine on this machine over USB:

https://dl.dropboxusercontent.com/u/112537/USBSSD.png

So it seems the performance issue is limited to the SATA side and not something inside the system. CPU and memory utilization are very low on this system. This is why I initially started chasing the IAStorA.sys drivers and found your page. There is something wrong on the Intel side of things, I’m convinced.

Also, I have now installed 4 or 5 different Intel drivers. Is there a good way to clean that out and maybe start fresh, just to make sure my drivers are installed absolutely right? For the Intel chipset I used the -overall flag because I was stuck with all of these default Microsoft 2006 drivers.

Which ones?

Usually it is no problem to change the in-use Intel RAID driver version. The only exception is a "downgrade" from an Intel RST(e) driver named iaStorA.sys (v11.5 or higher), which uses an additional SCSI filter driver named iaStorF.sys, to a "classical" RST driver named iaStor.sys (latest version: v11.2.0.1006).

For details you may read >this< article.

EDIT:

Although this has nothing to do with your performance issues, it was not a good idea to force the installation of unneeded or unusable INF files by running the installer of the Intel Chipset Device Software with the -overall command extension. Users who do that will not get any benefit for their system, but definitely a blown-up registry and a Windows\Inf folder full of garbage.

Thanks, for the -overall, for whatever reason I could only get Microsoft provided Intel 7-Series drivers in Device Manager. When I would run the INF installer, it would just go immediately to “Finished”. In my case, now I have Intel provided drivers.

Intel emailed me back about my report and said I need to trim each drive outside of the RAID array which is a major pain. I don’t have any more SATA ports on this rig so I probably need to remove the SSDs and take them to my other desktop.

FYI: The Intel Chipset Device Software doesn’t contain any drivers (drivers are .SYS files). So you didn’t get any Intel driver installed, but just (unneeded) Intel information files with the extension .INF.

My comment:

1. HDDs do not support the TRIM command.

2. A secure erasure of the SSDs would be easier and better.

I solved the issue (I think).

Intel was right, and further research suggests that RST doesn’t TRIM RAID1 at all (although it does for RAID0). What I did was:

1. Removed one SSD from that system and put it into my desktop

2. Ran CrystalDisk on that SSD as is in the new system, got 40 MB/s write

3. Used SanDisk SSD Dashboard to TRIM that SSD

4. Re-ran CrystalDisk and immediately got 164 MB/s write

After that, I sanitized the disk and put it back in, rebuilt the RAID array (which took only like 2 minutes), then removed the other drive, TRIM’d, sanitized and am now rebuilding it again. So the issue is related to RST especially in RAID1 where it never TRIMs.

@ BrianV:

Thanks for your interesting report about how you solved the performance problem. This confirms the importance of TRIM support, especially for SSDs with insufficient Garbage Collection.

By the way: Didn’t you know that the TRIM command cannot pass through the Intel RAID Controller into a RAID1 array?

Another question:

What about your RAID5 members? TRIM doesn’t matter for HDDs, so what was the reason for their drastic performance drop?

Well there is unreliable info about TRIM passing through with RAID0 and maybe RST Enterprise now allows TRIM passthrough.

For RAID5, its performance wasn’t as bad; I can get over 100 MB/s write. I am doing a defrag and we will see. My device is write-once read-many on the R5 array so I don’t mind if write performance isn’t superb.

I’ve read that TRIM is not available for RAID1 with RST and SSDs. Is it possible that the drives are not doing their own garbage collection and the cells are filling up?

Trim does pass through with RST and raid0, this has been confirmed by Intel quite a few versions ago.

It also appears that with latest-gen drives like the 850 EVO and MX100 you lose about 10% bandwidth without TRIM, and those models have their own garbage collection.

That should keep them in top performance.

I currently run raid1 on raptors but would love to update to Samsung 850’s, just not keen on the whole trim issue.

I’ve reconfigured my system to not download to the desktop and really limit my unnecessary write/delete on the SSD array. Until Intel allows this pass-through, I wouldn’t recommend this approach. I’ll probably quarterly trim the SSDs manually and hope Intel provides this functionality. Fortunately, this is an HTPC and C_Drive write performance isn’t a production issue, just an annoyance.

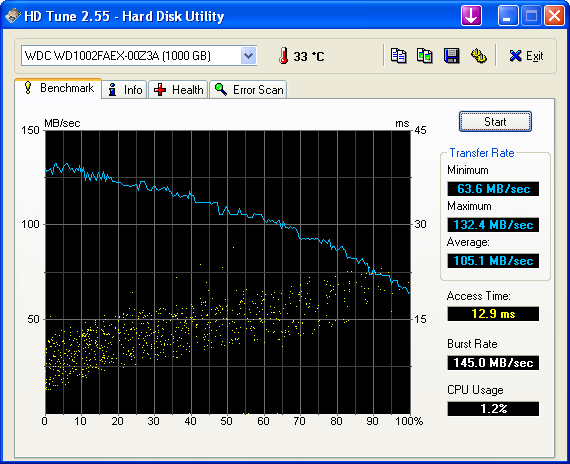

I don’t want to veer off topic, but I’m getting relatively low burst speed with my ICH8 and the recommended 11.2.0.1006/11.2.0.1527 driver/bios:

Is it necessary to install Intel Matrix Storage Manager and enable write-back cache?

Yes, if you want to enable the Write-Back Caching feature, I recommend installing the modded "Universal Intel RST Drivers and Software Set v11.2.0.1006". Note: Before you run the installer, you have to make sure that the required .NET Framework v3.5 is installed.

After the next reboot you should run the Console software v11.2.0.1006 and enable the Write-Back Caching. If you want, you can uninstall the RST software afterwards: the Write-Back Caching setting will stay forever (unless you break the RAID array or do a secure erase of the RAID members).